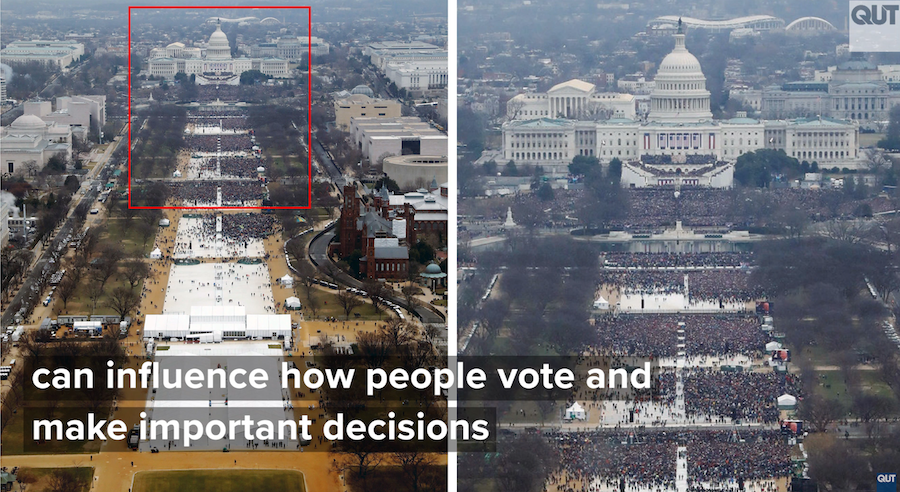

Image editing software is so ubiquitous and easy to use that it has the power to re-imagine history, according to researchers from QUT’s Digital Media Research Centre. And, they say, deadline-driven journalists lack the tools to tell real images from manipulated ones, especially when the images come through from social media.

Their study, Visual mis/disinformation in journalism and public communications, has been published in Journalism Practice. It was driven by the increased prevalence of fake news and by the struggle of social media platforms and news organisations to identify and combat visual mis/disinformation presented to their audiences.

‘When Donald Trump’s staff posted an image to his official Facebook page in 2019, journalists were able to spot the photoshopped edits to the president’s skin and physique because an unedited version exists on the White House’s official Flickr feed,’ said lead author Dr T.J. Thomson.

‘But what about when unedited versions aren’t available online and journalists can’t rely on simple reverse-image searches to verify whether an image is real or has been manipulated?

‘When it is possible to alter past and present images, by methods like cloning, splicing, cropping, re-touching or re-sampling, we face the danger of a re-written history – a very Orwellian scenario,’ Thomson continued.

Examples highlighted in the report include photos of crocodiles on Townsville streets during a flood, shared by news outlets last year, which were later shown to be images of alligators in Florida from 2014. It also quotes a Reuters employee on their discovery that a harrowing video shared during Cyclone Idai, which devastated parts of Africa in 2019, had been shot in Libya five years earlier.

Image courtesy QUT Digital Media Research Centre.

An image of Dr Martin Luther King, Jr.’s reaction to the US Senate’s passing of the civil rights bill in 1964 was manipulated to make it appear that he was flipping the bird to the camera. This edited version was shared widely on Twitter, Reddit, and white supremacist website The Daily Stormer.

Dr Thomson, Associate Professor Daniel Angus, Dr Paula Dootson, Dr Edward Hurcombe, and Adam Smith have mapped journalists’ current social media verification techniques and suggest which tools are most effective for which circumstances.

‘Detection of false images is made harder by the number of visuals created daily – in excess of 3.2 billion photos and 720,000 hours of video – along with the speed at which they are produced, published, and shared,’ said Dr Thomson.

‘Other considerations include the digital and visual literacy of those who see them. Yet being able to detect fraudulent edits masquerading as reality is critically important.

‘While journalists who create visual media are not immune to ethical breaches, the practice of incorporating more user-generated and crowd-sourced visual content into news reports is growing. Verification on social media will have to increase commensurately if we wish to improve trust in institutions and strengthen our democracy.’

Dr Thomson said a recent quantitative study performed by the International Center for Journalists (ICFJ) found a very low usage of social media verification tools in newsrooms.

‘The ICFJ surveyed over 2,700 journalists and newsroom managers in more than 130 countries and found only 11% of those surveyed used social media verification tools,’ he said.

‘The lack of user-friendly forensic tools available and low levels of digital media literacy, combined, are chief barriers to those seeking to stem the tide of visual mis/disinformation online.’

Associate Professor Angus said the study demonstrated an urgent need for better tools, developed with journalists, to provide greater clarity around the provenance and authenticity of images and other media.

‘Despite knowing little about the provenance and veracity of the visual content they encounter, journalists have to quickly determine whether to re-publish or amplify this content,’ he said.

‘The many examples of misattributed, doctored, and faked imagery attest to the importance of accuracy, transparency, and trust in the arena of public discourse. People generally vote and make decisions based on information they receive via friends and family, politicians, organisations, and journalists,’ Angus continued.

The researchers cite current manual detection strategies – using a reverse image search, examining image metadata, examining light and shadows, and using image editing software – but say more tools need to be developed, including more advanced machine learning methods, to verify visuals on social media.
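As a rough illustration of what "examining image metadata" can involve, the sketch below walks the header segments of a JPEG file and reports whether it carries an Exif block or an Adobe Photoshop resource block. It is not a tool from the study; the function names (`build_segment`, `list_jpeg_metadata_segments`, `summarize`) are hypothetical, and it uses only the Python standard library. The result is a hint at best: many platforms strip or rewrite metadata on upload, so a missing block does not prove an image is authentic, and a Photoshop block does not prove it was deceptively altered.

```python
import struct


def build_segment(marker: int, payload: bytes) -> bytes:
    """Helper for demos/tests: encode one JPEG segment (marker, length, payload)."""
    # The 2-byte big-endian length counts itself plus the payload.
    return b"\xff" + bytes([marker]) + struct.pack(">H", len(payload) + 2) + payload


def list_jpeg_metadata_segments(data: bytes):
    """Return (segment_name, identifier) for each APPn/COM segment before the image data."""
    if data[:2] != b"\xff\xd8":
        raise ValueError("not a JPEG: missing SOI marker")
    segments = []
    pos = 2
    while pos + 4 <= len(data):
        if data[pos] != 0xFF:
            raise ValueError(f"expected a marker at offset {pos}")
        marker = data[pos + 1]
        if marker == 0xFF:  # fill byte before a marker, skip it
            pos += 1
            continue
        if marker in (0xDA, 0xD9):  # start of scan or end of image: stop
            break
        (length,) = struct.unpack(">H", data[pos + 2:pos + 4])
        if length < 2:
            raise ValueError(f"invalid segment length at offset {pos}")
        payload = data[pos + 4:pos + 2 + length]
        if 0xE0 <= marker <= 0xEF:  # APP0..APP15 application segments
            ident = payload.split(b"\x00", 1)[0].decode("latin-1")
            segments.append((f"APP{marker - 0xE0}", ident))
        elif marker == 0xFE:  # free-text comment segment
            segments.append(("COM", payload.decode("latin-1")))
        pos += 2 + length
    return segments


def summarize(data: bytes) -> dict:
    """Flag metadata blocks that are commonly worth a closer manual look."""
    segs = list_jpeg_metadata_segments(data)
    return {
        "has_exif": ("APP1", "Exif") in segs,
        "has_photoshop_block": any(
            name == "APP13" and ident.startswith("Photoshop") for name, ident in segs
        ),
        "segments": segs,
    }
```

To try it on a real file, read the bytes first, for example `summarize(open("photo.jpg", "rb").read())`, where `photo.jpg` stands in for whatever image is being checked. A fuller workflow would go on to decode the Exif fields themselves (capture time, device, software), which this sketch deliberately leaves out.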

This article was originally published by QUT Media. The report was co-authored by Dr Thomson, Associate Professor Daniel Angus, Dr Paula Dootson, Dr Edward Hurcombe, and Adam Smith.